A/B testing in Meta ads can increase conversion rates by 50% or more compared to campaigns without testing. Yet many advertisers launch ads without measuring which elements drive results, wasting thousands in monthly ad spend.

By testing variations in headlines, images, and audience targeting, marketers can cut cost per conversion in half while doubling campaign performance. Data-driven insights replace guesswork, ensuring every dollar spent works harder.

This guide explains what A/B testing is and why it’s essential for maximizing Meta campaign results in 2025.

What is A/B Testing in Paid Social Advertising?

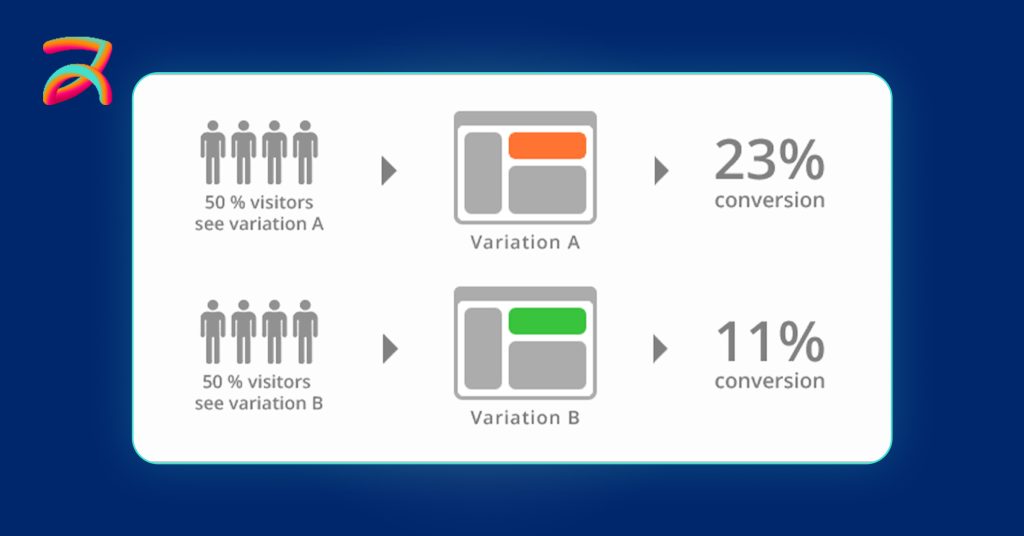

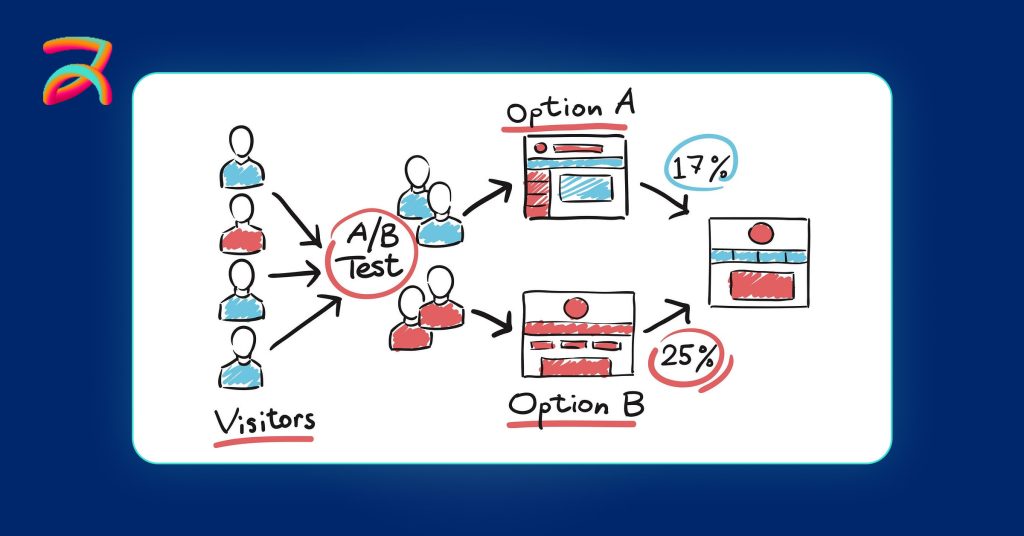

A/B testing is a method where you run two versions of an advertisement simultaneously to determine which performs better. In Meta Ads, this involves creating two identical campaigns except for one variable, such as the headline, image, audience targeting, or call-to-action button. This is something any social media marketing strategy can benefit from.

The process works by splitting your audience randomly between the two ad versions (Version A and Version B). Meta’s platform tracks key metrics like click-through rates, conversions, and cost per result for each version. After collecting enough data, you can identify which variation delivers superior performance.
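To make the idea of a random split concrete, here is a minimal sketch of one common way to divide users evenly and consistently between two versions. Meta handles the split for you inside its own tools, so this is an illustration of the concept only, not a description of how Meta does it internally. The function name, the test name, and the user IDs are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, test_name: str = "cta_test") -> str:
    """Deterministically place a user in group A or B (illustrative only)."""
    # Hashing the test name together with the user ID gives a stable,
    # effectively random bucket: the same user always lands in the same group.
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Hypothetical audience of 10,000 user IDs
groups = [assign_variant(f"user-{i}") for i in range(10_000)]
print("A:", groups.count("A"), "B:", groups.count("B"))
```

Because the assignment depends only on the ID, a person never sees both versions, which keeps the comparison between A and B clean.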

A/B testing eliminates guesswork in advertising decisions. Instead of assuming what works, you get concrete data showing which elements drive better results for your specific audience and campaign objectives.

Why A/B Testing Matters More Than Ever in 2025

A/B testing matters because it directly reduces advertising costs while improving campaign performance. Companies using systematic testing spend 37% less per conversion and achieve 30-50% higher click-through rates compared to those running static campaigns.

The competitive landscape has intensified with over 10 million active advertisers on Meta’s platform. Without testing, advertisers rely on assumptions that often lead to wasted budget and poor performance. A/B testing provides concrete data to make profitable decisions.

Meta’s automation tools, like Advantage+ Shopping and dynamic creative optimization, require quality inputs to function effectively. A/B testing identifies winning creative elements, audience segments, and messaging that these AI systems can then scale automatically across your campaigns.

🎯 Ready to Boost Your Ad Performance with A/B Testing?

Discover which headlines, creatives, and audiences deliver the best results. Optimize your budget, lower your CPC, and increase conversions. It all starts with testing smarter.

Start Testing Smarter Now →

What Can You Test in Meta Ads?

Meta Ads allow you to test multiple campaign elements to identify what drives the best results. The most impactful elements to test include:

- Ad Copy Length: Short vs. Long

- Call-To-Action Buttons: Learn More vs. Shop Now

- Creative Formats: Static images vs. Video

- Headline Approaches: Emotion-Driven vs. Benefit-Focused

Testing these elements systematically reveals which combinations work best for your specific audience and objectives. Based on recent studies and observations in 2025, video content is reported to generate significantly higher engagement than static images in a majority of cases, while benefit-driven headlines often outperform emotional appeals in B2B campaigns.

The key to successful testing lies in changing only one element at a time to isolate what impacts performance. When it comes to creating high-converting Instagram Ads, these testing principles become even more critical given the platform’s visual-first nature and younger demographic.

Why Meta Ads Are Perfect for A/B Testing & The Benefits You Can Expect

Meta’s advertising ecosystem gives you a unique testing environment that few other platforms can match. With billions of active users across Facebook, Instagram, Messenger, and Audience Network, you have access to diverse audience segments that can produce statistically reliable results.

This variety allows you to see how different creative, targeting, or messaging performs in real-world scenarios before scaling your investment.

Key platform strengths for A/B testing include:

- Massive Audience Reach: Billions of users let you split campaigns into meaningful test groups without sacrificing statistical accuracy.

- Advanced Audience Segmentation: Target by demographics, interests, behaviors, or custom data to understand how different customer types respond.

- Multiple Ad Formats: Experiment with static images, short-form videos, carousels, or immersive Stories to see what drives engagement in each placement. If you’re experimenting with short-form vertical content, Facebook Reels Strategies can give you ideas that fit naturally into your testing process.

- Cross-Platform Placements: Test the same creative in Feed, Stories, Reels, or In-Stream Video to measure performance differences.

- Native Testing Tools: Meta’s Experiments Tool lets you duplicate ad sets, change one variable, and split budgets evenly to ensure clean, unbiased results.

By leveraging these capabilities, advertisers can pinpoint exactly which elements resonate with their audience and feed those winning combinations into Meta’s AI optimization tools for even stronger results.

Business Impact of A/B Testing on Meta Ads

Consistent, structured testing delivers measurable improvements in campaign performance. Instead of guessing, you base decisions on real user behavior, which compounds results over time.

| Benefit | Why It Matters |
| --- | --- |
| Higher CTR | Identifies the creatives and messages that grab attention, leading to more clicks and traffic. |
| Better CVR | Reveals which combinations convert visitors into buyers, subscribers, or leads. |
| Lower CPL | Eliminates waste by focusing the budget on ads that produce results efficiently. One test even achieved a 66% drop in cost per lead. |
| Stronger ROAS | Maximizes revenue per dollar spent by scaling only high-performing ads. |

For example, structured tests often uncover differences you wouldn’t expect, such as a 15-second video generating 30% more conversions than a static image with the same audience and budget. These insights can translate into significantly higher returns without increasing your spend.

What to Prioritize When Testing Meta Ads

The most effective testing strategy focuses on two main areas: creative elements and ad settings, while changing only one variable at a time to get clear, actionable results.

Creative Elements to Test:

- Primary Text: Short, direct messaging vs. longer, storytelling content

- Headlines: Emotional appeal vs. benefit-focused or action-driven headlines

- Visuals: Static images, short-form videos, carousels, or UGC-style content

- Calls-To-Action: Variations such as “Learn More,” “Shop Now,” or “Get Offer”

Ad Settings to Test:

- Placements: Compare performance in Feed, Stories, Reels, and In-Stream Video

- Objectives: Test traffic, lead generation, or conversion goals to see which aligns with your audience’s readiness to act

- Audience Types: Experiment with lookalike audiences, interest-based targeting, or custom segments

When done systematically, these tests help you uncover not just what works, but why it works, giving you a roadmap for scaling future campaigns with confidence.

Step-by-Step A/B Testing Framework for Meta Ads

A winning Meta Ads campaign isn’t just about great creative. It’s about validating your ideas with structured experiments. A well-planned test removes uncertainty, reveals patterns in user behavior, and helps you scale with confidence.

The framework below breaks the process into clear, actionable steps you can follow for consistent improvements.

Define Your Hypothesis

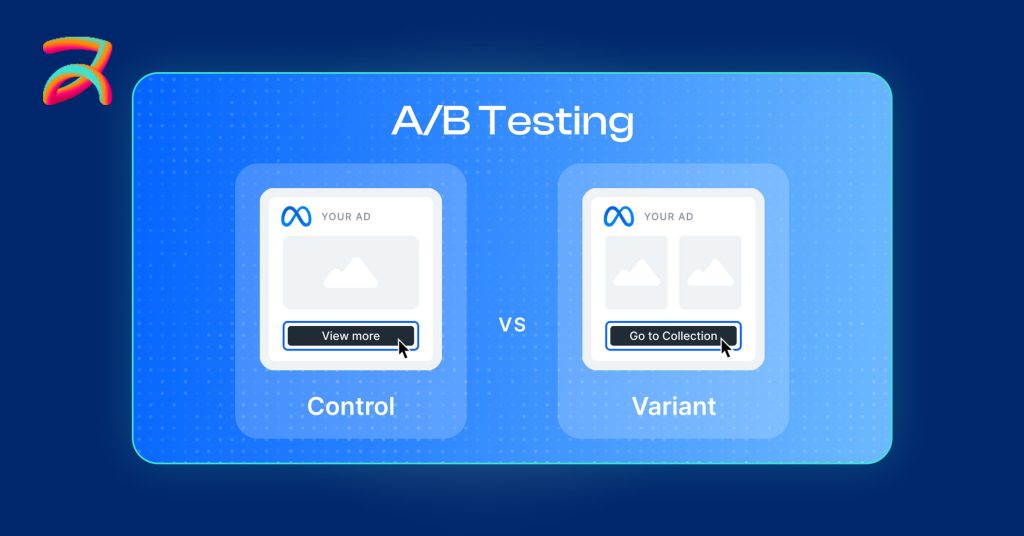

Start with a clear goal instead of random testing. State the change you’re making and the result you expect. Example: Changing the CTA from “Learn More” to “Shop Now” will increase conversions by 20%.

Choose One Variable

Test a single element at a time so results are reliable. You might test:

- Primary text

- Headlines

- Visuals (image vs. video)

- CTA buttons

- Placement or audience type

Set Up Control and Variant Ads

Create two identical ad sets in Meta Ads Manager, changing only the chosen variable. Run them simultaneously and target similar audiences for fairness.

Allocate Enough Budget

Aim for at least $20 per ad set per day to exit the learning phase and collect accurate data. Too little budget can lead to misleading results.

Run for 7–14 Days

Give the test at least 7 days, and up to 14 when the audience is small or the budget is limited, so Meta’s algorithm can stabilize and enough data can accumulate. Use tools like the Optimizely A/B Test Duration Calculator to determine the ideal timeframe.
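If you want a rough sense of what a duration calculator does, the sketch below estimates how many visitors each version needs and converts that into days. It uses the standard two-proportion sample size formula with a 95% confidence level and 80% power, which are conventional defaults assumed here rather than figures from this guide. The baseline rate, expected lift, and daily traffic are hypothetical inputs you would replace with your own.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Visitors needed per version to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided confidence
    z_beta = NormalDist().inv_cdf(power)           # statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_variant, daily_visitors_per_variant):
    """Whole days required to reach the sample size at a steady traffic level."""
    return math.ceil(n_per_variant / daily_visitors_per_variant)


# Hypothetical inputs: 5% baseline conversion rate, hoping for a 20% lift,
# with 600 visitors per version per day.
n = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.20)
print("Visitors per version:", n)
print("Days needed:", days_needed(n, daily_visitors_per_variant=600))
```

Smaller expected lifts or lower daily traffic push the required duration up quickly, which is why low-budget tests usually need the longer end of the 7–14 day range.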

Analyze and Apply

Review results based on your original goal, such as CTR, conversion rate, cost per result, or ROAS. Apply the winning version across other campaigns, or test a new variable if results are inconclusive.
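One way to judge whether results are conclusive is a two-proportion z-test on conversion rates. The sketch below is a generic statistical check, not the method Meta’s own reporting uses, and the conversion counts are invented for illustration. A p-value below 0.05 is a common (assumed) threshold for calling a winner.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Not enough variation in the data to run the test")
    z = (conv_b / n_b - conv_a / n_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: version A converts 100 of 2,000 clicks,
# version B converts 130 of 2,000 clicks.
z, p = two_proportion_z_test(conv_a=100, n_a=2000, conv_b=130, n_b=2000)
verdict = "significant" if p < 0.05 else "inconclusive"
print(f"z = {z:.2f}, p = {p:.3f} -> {verdict}")
```

If the result is inconclusive, that usually means the test needs more data or the difference between the versions is too small to matter.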

Common A/B Testing Mistakes and How to Fix Them

Even well-planned A/B tests can fail to deliver meaningful results if key mistakes are made during setup or analysis. Recognizing these pitfalls early will help you avoid wasted spend and unreliable data.

Testing Multiple Variables at Once

Changing the image, headline, and CTA in a single test makes it impossible to know which change drove the results. Testing multiple elements together is one of the most common Meta Ads mistakes because it muddies your data. Test one variable at a time for clean, actionable insights, and run tests in sequence if you want to improve several elements.

Ending Tests Too Early

Stopping a test before it has enough data can lead to misleading conclusions. Ads need time to exit the learning phase and produce statistically valid results.

Ending too soon often results in picking the wrong winner. A good rule is to run tests for at least seven full days, or longer if the audience is small or the budget is limited.

Focusing on the Wrong Metrics

A high CTR does not always mean your ad is converting. Success should be measured against the campaign’s main goal, such as cost per conversion or return on ad spend, instead of vanity metrics. Shifting focus to metrics that truly impact revenue ensures your optimizations lead to actual business growth.

A/B Testing Ad Placements: Reels, Stories, Feed

Placement impacts how your ad looks, how people engage with it, and how well it converts. Testing helps you find where your ads perform best so you can focus your budget there.

Why Placement Testing Matters in Meta Ads

Ad performance varies by placement: an ad that performs well in Facebook Feed may lag in Instagram Stories. Testing shows where your ads get the best results, helping you focus your budget on the highest-return channels.

When to test placements:

- Launching a new campaign and need to find the top-performing channel

- Seeing uneven results across current placements

- Matching different creative formats to the most effective environment

How to test:

- Create two identical ad sets, changing only the placement (e.g., one in Reels, one in Stories)

- Use the same copy, audience, and budget

- Track key metrics like cost per click, cost per conversion, and ROAS

Short-form video often outperforms static content in Reels and Stories, driving 2-8× more engagement.

Budgeting for A/B Testing Campaigns

Without enough budget, your tests can’t produce accurate results. Aim for a spend that lets Meta’s algorithm optimize and deliver stable performance data. Understanding the cost to run Meta ads can help you balance spending with meaningful results.

Recommended Daily Test Spend

- Minimum: $20 per ad set per day

- For higher-cost objectives (conversions, lead generation): $30+ per ad set per day

- More budget = faster, more reliable results
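To turn the daily figures above into a total test budget, multiply the daily spend by the number of ad sets and the number of days. The short sketch below does that arithmetic; the function name is illustrative, and the default of two ad sets over seven days is an assumption based on a simple A-versus-B test.

```python
def total_test_budget(daily_per_ad_set, ad_sets=2, days=7):
    """Total spend for a test: daily budget x ad sets x days."""
    return daily_per_ad_set * ad_sets * days


# Minimum recommended spend, two ad sets, one week
print(total_test_budget(20))            # 20 * 2 * 7 = 280
# Higher-cost objective, two ad sets, two weeks
print(total_test_budget(30, days=14))   # 30 * 2 * 14 = 840
```

Planning the full amount up front helps you avoid cutting a test short when the budget runs out midweek.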

Budgeting Across the Funnel: TOFU, MOFU, BOFU

Your testing budget should also reflect your marketing funnel stage. Awareness campaigns at the top of the funnel generally cost less per result, while conversion-focused campaigns at the bottom require more investment but often deliver the highest return.

| Funnel Stage | Goal | Suggested Test Budget |
| --- | --- | --- |
| TOFU | Awareness and reach | $20–$40 per ad |
| MOFU | Engagement and leads | $30–$60 per ad |
| BOFU | Conversions and sales | $50–$100 per ad |

By matching your spend to both funnel stage and campaign goals, you ensure that every test has the resources it needs to produce actionable, profitable insights.

Tools for Meta A/B Testing

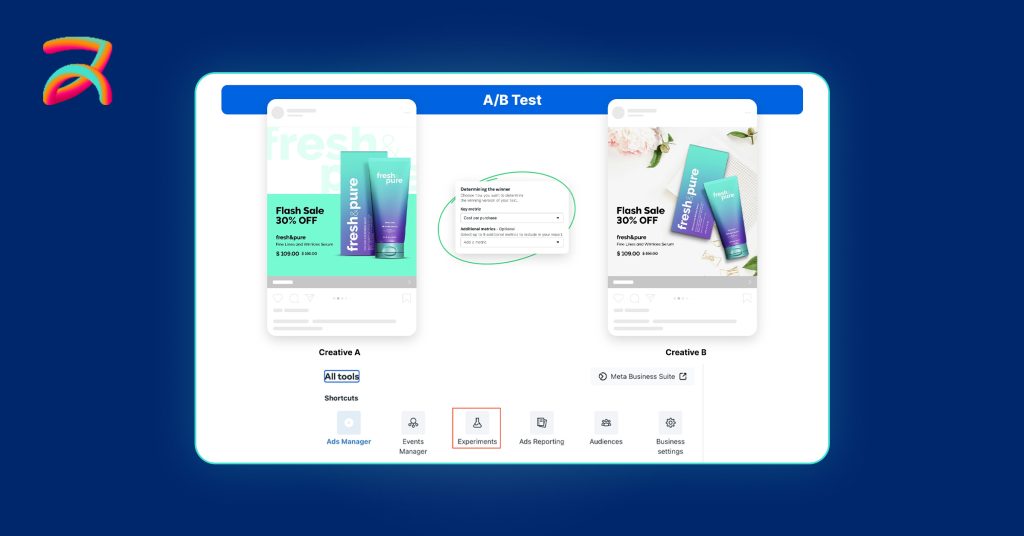

Effective A/B testing relies on the right tools to manage experiments, track performance, and make data-driven adjustments. Meta offers powerful built-in options, and third-party platforms can expand your capabilities when you need extra flexibility.

Native Tools Inside Meta Ads Manager

Meta’s built-in features make it simple to set up and run clean, structured tests without leaving the platform:

- A/B Test Tool: Allows you to duplicate an ad set or campaign and test one variable at a time

- Experiments Tool: Provides advanced testing options with clean test-control environments

- Dynamic Creative: Automatically mixes and matches headlines, visuals, and text to find the best-performing combinations

These integrate directly with Meta’s reporting dashboard, making results easy to track and compare.

Third-Party A/B Testing Platforms

While Meta’s native tools are powerful, some advertisers prefer external platforms for more advanced automation, bulk testing, or client reporting features.

Here are two popular third-party options:

- AdEspresso: A user-friendly platform that simplifies A/B testing across Facebook and Instagram ads. It provides detailed reporting, automatic optimization suggestions, and easy-to-set-up experiments

- Revealbot: A more advanced tool suited for agencies and power users who need custom automation rules, advanced split testing, and cross-platform campaign control

These tools can save time, reduce manual work, and help you stay consistent with your testing process.

How to Interpret Results and Scale Winners

If a variation has delivered strong results, you can plan to scale it in a controlled way. If it underperformed, the focus should shift to identifying what can be improved before testing again. This step is about turning test outcomes into clear, data-backed actions that move your campaign forward.

What Metrics to Prioritize

Once your A/B test ends, focus on metrics that match your campaign goals. Tracking the wrong numbers can lead to wasted spend.

Here are the key metrics to monitor:

- Return on Ad Spend (ROAS): reveals how much revenue was generated for every dollar spent

- Conversion Rate (CVR): measures how many people took the desired action after clicking your ad

- Frequency: shows how often your ad was shown to the same user, helping you spot ad fatigue

- Click-Through Rate (CTR): tells you how effective your creative is at getting attention, but it should always be viewed alongside conversion performance

For example, an ad with a high CTR but low CVR might look good at first, but it is attracting clicks that rarely turn into customers. On the other hand, an ad with a lower CTR and a strong ROAS could be more valuable for your bottom line.
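The sketch below shows how these metrics are calculated from raw totals and why CTR alone can mislead. All numbers are made up for illustration, and the class and field names are hypothetical rather than anything exported by Meta Ads Manager.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Raw totals for one ad variation (figures below are invented)."""
    impressions: int
    clicks: int
    conversions: int
    spend: float
    revenue: float

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions

    @property
    def cvr(self) -> float:
        return self.conversions / self.clicks

    @property
    def cost_per_conversion(self) -> float:
        return self.spend / self.conversions

    @property
    def roas(self) -> float:
        return self.revenue / self.spend


variant_a = AdStats(impressions=10_000, clicks=300, conversions=6, spend=200.0, revenue=300.0)
variant_b = AdStats(impressions=10_000, clicks=150, conversions=12, spend=200.0, revenue=600.0)

for name, ad in (("A", variant_a), ("B", variant_b)):
    print(f"{name}: CTR {ad.ctr:.1%}, CVR {ad.cvr:.1%}, "
          f"cost/conv ${ad.cost_per_conversion:.2f}, ROAS {ad.roas:.1f}x")
```

In this invented example, variation A earns twice the CTR of variation B but only half the ROAS, so B is the one worth scaling.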

When to Scale and When to Pause

If one variation consistently outperforms the other, it’s a clear sign it’s ready to scale. Increase the budget by 20 to 30 percent every few days so Meta’s algorithm can adjust without restarting the learning phase.

Avoid making large budget jumps, as these often cause unstable results and unpredictable performance.
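To see what gradual scaling looks like in practice, the sketch below builds a simple schedule that raises the daily budget by a fixed percentage at regular intervals. The starting budget of $50, the 20% step, and the three-day interval are assumptions chosen to match the "20 to 30 percent every few days" guidance; adjust them to your own campaign.

```python
def scaling_schedule(start_budget, step_pct=0.20, days_between_steps=3, steps=4):
    """Return (day, daily_budget) pairs for a gradual, compounding increase."""
    schedule = []
    budget = start_budget
    for i in range(steps + 1):
        schedule.append((i * days_between_steps, round(budget, 2)))
        budget *= 1 + step_pct
    return schedule


for day, budget in scaling_schedule(50):
    print(f"Day {day:>2}: ${budget:.2f} per day")
```

With these assumed settings, the daily budget roughly doubles in about twelve days without any single jump larger than 20%.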

If neither variation meets your goals, pause the test and review possible problem areas such as the creative, targeting, offer, or placement. Use what you discover to design a stronger test for the next round.

Winning variations can also be applied beyond the original campaign. For example, you can use them in retargeting with Meta Ads to re-engage people who have already shown interest in your brand.

🚀 Ready to Maximize Your Meta Ads Results?

A/B testing reveals the winning headlines, creatives, and audiences that deliver more conversions for less cost. Stop guessing and start optimizing every campaign with data-backed decisions.

Start Your First A/B Test Today →

Real-World Use Case: Agency-Run A/B Test

A real-life example shows just how impactful structured A/B testing can be when done correctly. In a campaign run by a professional Meta Ads agency, the cost per lead was reduced dramatically through a simple testing strategy.

The agency tested different combinations of ad copy, visuals, and CTA buttons across multiple placements. Rather than changing everything at once, they focused on one variable at a time and used a consistent testing cycle.

This success didn’t happen overnight. It was driven by:

- Testing one variable at a time

- Allowing each test to run for at least seven days

- Scaling only the best-performing version

- Applying learnings across future campaigns

Working with a Meta Ads agency gives you access to industry expertise, advanced tools, and proven testing frameworks. If you want experts who know how to run and scale winning A/B tests, partnering with professionals can help you reach your goals faster and with less wasted budget.

B2B vs B2C A/B Testing on Meta

While the core A/B testing process is similar, strategies for B2B and B2C differ due to audience behavior, sales cycles, and creative needs.

| Factor | B2B Approach | B2C Approach |
| --- | --- | --- |
| Conversion Windows | Longer sales cycles require extended tracking to capture delayed purchase decisions | Shorter buying journeys allow quicker evaluation of results |
| Audience Targeting | Narrower segments (e.g., industry decision-makers) often mean slower data collection and higher cost per lead | Broader demographics enable faster testing and quicker insights |
| Creative Approach | Longer, detailed copy that addresses business challenges and ROI | Short, emotionally driven messaging focused on lifestyle and benefits |

Local Business A/B Testing Strategy

Local businesses can boost Meta Ads performance by tailoring tests to specific locations.

Geo-Targeting Tests

- Compare ads targeting a single city vs. a wider region.

- Test radius targeting to measure the impact of proximity on conversions.

Location-Based Personalization

- Use neighborhood names, local events, or city-specific offers in ad copy.

- Align visuals and promotions with the local culture for higher engagement.

When to Hire an Agency for A/B Testing

While starting with in-house testing works for many businesses, there comes a stage where bringing in outside expertise can speed things up and take the guesswork out of optimization. An experienced agency can help you design smarter tests, spot patterns faster, and make sure your budget is going toward what works.

Signs You Need Professional Support

- Tests keep giving inconsistent or unclear results

- There’s not enough time or resources in-house to run and analyze tests properly

- Cost per lead or sale stays high despite ongoing testing

- It’s unclear which metrics should guide scaling decisions

- You want to try advanced methods like multivariate or cross-platform testing

If this sounds familiar, it might be time to work with experts who live and breathe Meta advertising. Knowing why you should hire a Meta Ads agency can help you see how a dedicated team can manage A/B tests, uncover winning combinations, and turn insights into more sales at a lower cost.

Conclusion: A/B Test Smarter, Scale Faster

A/B testing in Meta Ads is a powerful way to optimize campaigns, improve performance, and maximize ROI. By focusing on one variable at a time, allowing tests to run until results are significant, and monitoring the right metrics, you can make informed decisions that deliver real impact.

This approach helps reduce costs, increase conversions, and scale winning campaigns with confidence. Consistent testing, learning from past results, and applying those insights to future campaigns will ensure your Meta Ads strategy stays competitive and effective.

FAQs

What is A/B testing in Meta Ads?

A/B testing is when you run two versions of an ad that differ in just one element, like the headline or image, to see which performs better. This helps you make decisions based on data instead of guesswork.

Why is A/B testing important?

It lets you find what works for your audience and cut out what doesn’t. This means higher engagement, lower costs, and more conversions without wasting budget.

How long should an A/B test run?

Most tests need at least 7–14 days to gather enough data for a reliable result. Ending too early can lead to wrong conclusions and poor decisions.

What can you test in Meta Ads?

You can test ad copy length, headlines, images, videos, calls-to-action, audience targeting, and placements. The key is to change only one thing at a time so that results are accurate.

Which metrics should you use to pick a winner?

Match your evaluation metrics to your campaign goal. For example, if sales are your goal, focus on ROAS and conversion rate, not just click-through rate.

How quickly should you increase the budget on a winning ad?

Increase by 20–30% every few days. This gives Meta’s algorithm time to adjust without resetting the learning phase and hurting performance.

What should you do if neither variation performs well?

Pause the test and review your creative, targeting, offer, and placements. Use what you learn to design a stronger variation for the next test.

Can winning ads be reused in other campaigns?

Yes, winning ads often perform well in other stages of your funnel, especially in retargeting with Meta Ads to re-engage warm audiences.

Is A/B testing different for B2B and B2C?

Yes. B2B usually requires longer conversion windows, niche targeting, and detailed copy, while B2C benefits from short, emotional messaging and faster testing cycles.

When should you hire an agency for A/B testing?

If your results are inconsistent, costs stay high, or you lack time and tools to run proper tests, an agency can bring expertise and proven frameworks to improve performance.